I graduated from the University of Pune, India, with a Bachelor of Engineering degree in Computer Engineering in 2013. Before that, I wanted to join the Indian Army just like my father. I even appeared for my SSB interview in Bhopal, but I couldn’t clear it. Realizing I didn’t have many chances left to attempt the SSB again (it takes place once a year), I enrolled in an engineering college in Pune, Maharashtra (also called the Oxford of the East). Those four years turned out to be the most fruitful and engaging of my life and paved the path for my future. During that time I became self-dependent, learned about computers and programming languages, got introduced to C, C++, Python, SQL and databases, and made some great friends. I was never interested in Java, which was primarily what colleges taught in those days, so after Engineering, when I moved back to New Delhi, I started looking for roles in the Data or Networking space (I used to think Networking was an easy field).

I’m glad to have this platform to share my Data Journey with you all: how I entered the field and where I see myself right now. My professional journey started with AON.

AON

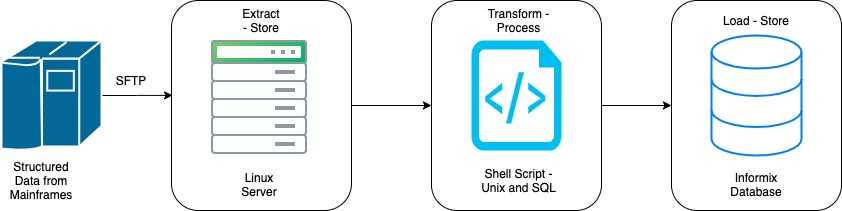

I started my career in January 2014 with AON as a Software Engineer in Gurugram, India. I joined the Business Intelligence team, which was responsible for managing the Data Warehouse systems for our businesses. That’s where I started this long-haul journey and got introduced to the black box: the Linux terminal. I was managing a Legacy Data Warehouse (LDW) system running on Unix and storing data in good old IBM Informix databases. It was a simple ETL workflow: incoming data from our source systems (mainframes) arrived as flat files, we processed it using Unix and SQL, and we stored the transformed data in Informix. For reporting we used IBM Cognos, and we also built a generic CSV reporting system that read data from Informix, saved it as a CSV and sent it to our stakeholders. Back then we used BMC Software’s Control-M as our workflow orchestration tool. The ETL workflow I worked on looked something like below:

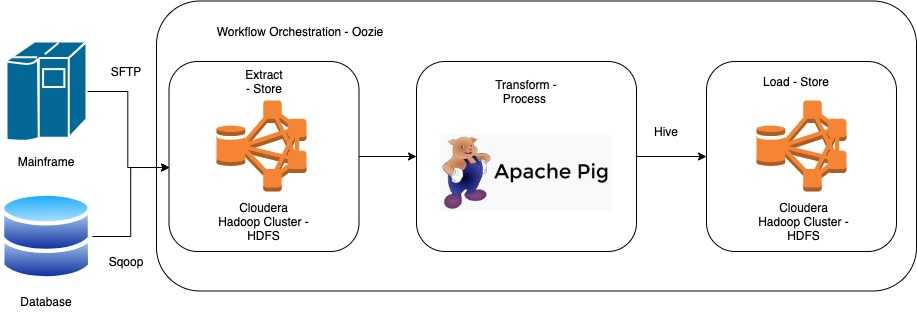
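To make the flat-file flow above a little more concrete, here is a minimal Python sketch of the same extract, transform, load and CSV-report steps. It is not the code we ran at AON (that was Unix shell scripts and SQL against Informix): `sqlite3` stands in for Informix, and the pipe-delimited feed layout, the `policy` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical mainframe extract: pipe-delimited policy_id|region|premium.
# The real feed layouts are not described here; this is illustrative only.
RAW_FEED = """P001|north|1200.50
P002|south| 980.00
P003|NORTH|  45.25
"""


def extract(feed):
    """Split the flat file into raw field lists, skipping blank lines."""
    return [line.split("|") for line in feed.splitlines() if line.strip()]


def transform(rows):
    """Trim fields, normalise region casing and cast premium to a number."""
    return [
        (pid.strip(), region.strip().upper(), float(premium.strip()))
        for pid, region, premium in rows
    ]


def load(conn, records):
    """Load transformed records into the warehouse (sqlite3 stands in for Informix)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS policy "
        "(policy_id TEXT PRIMARY KEY, region TEXT, premium REAL)"
    )
    conn.executemany("INSERT INTO policy VALUES (?, ?, ?)", records)
    conn.commit()


def csv_report(conn):
    """Generic CSV report: read back from the warehouse, aggregate, render as CSV text."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["region", "total_premium"])
    query = "SELECT region, SUM(premium) FROM policy GROUP BY region ORDER BY region"
    for region, total in conn.execute(query):
        writer.writerow([region, total])
    return out.getvalue()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(RAW_FEED)))
    print(csv_report(conn))
```

In the real setup Control-M triggered each step in sequence and the CSV was sent on to stakeholders; here the report is simply returned as a string so each stage stays easy to inspect.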

After managing the LDW platform and writing multiple shell scripts for ETL, I kicked off my Hadoop journey. Informix costs and outdated technology led us to replace LDW with a Big Data platform powered by Cloudera’s Distribution including Apache Hadoop (CDH). To date, this project is the most exciting assignment I have worked on. We decided to rewrite all those shell scripts in Pig Latin and run them on Cloudera. This decision changed our workflow considerably: we stored our source data in HDFS (Discovery Layer), processed it using Pig Latin and stored it back in HDFS (Landing Zone). We created multiple external and internal (managed) Hive tables on top of the processed data. IBM Cognos reports were configured to read data from the Hive tables, and our custom CSV reporting process also used Hive to generate its reports. We used Oozie, which ships as part of CDH, as our workflow scheduler. In the end, our ETL process looked something like below:

This project gave me many great insights into Hadoop technologies like HDFS, Hive, Sqoop, Impala, Beeline, Oozie, HUE, YARN, MapReduce, etc. During my last few months at AON I also worked on multiple Hadoop POCs, including HDFS Snapshots, Hive on Spark and Hive on Tez, to further broaden my understanding of the Hadoop ecosystem.
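The Hive layer on top of the Landing Zone mostly came down to table definitions pointing at HDFS directories. The Python sketch below renders HiveQL for an external table over delimited Pig output. The database, table, columns, `|` delimiter and HDFS path are all hypothetical, and in practice the statement would be submitted to Hive (for example through Beeline) rather than printed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    name: str
    hive_type: str  # e.g. STRING, DOUBLE, INT


def external_table_ddl(database, table, columns, location, delimiter="|"):
    """Render HiveQL for an EXTERNAL table over delimited text files in HDFS.

    Dropping an external table removes only the metastore definition; the
    files under LOCATION stay put, which suits data written by an upstream
    Pig job. A managed (internal) table omits the EXTERNAL keyword, and Hive
    deletes its data on DROP TABLE.
    """
    cols = ",\n  ".join(f"`{c.name}` {c.hive_type}" for c in columns)
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {database}.{table} (\n  {cols}\n)\n"
        f"ROW FORMAT DELIMITED FIELDS TERMINATED BY '{delimiter}'\n"
        "STORED AS TEXTFILE\n"
        f"LOCATION '{location}';"
    )


if __name__ == "__main__":
    # Hypothetical Landing Zone path and schema, for illustration only.
    print(
        external_table_ddl(
            "landing",
            "policy",
            [
                Column("policy_id", "STRING"),
                Column("region", "STRING"),
                Column("premium", "DOUBLE"),
            ],
            "/data/landing/policy",
        )
    )
```

Choosing external tables for Pig output keeps the data lifecycle with the pipeline rather than with Hive, so a table can be dropped and recreated without touching the files underneath.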

EXL

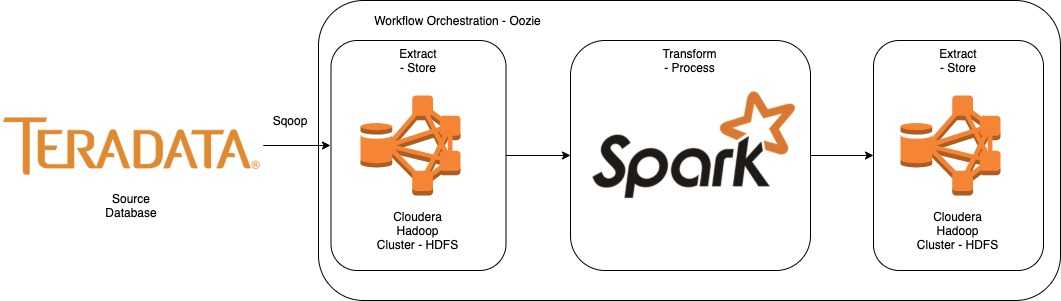

After AON I joined a relatively smaller firm, EXL, as a Business Analyst. I also had an offer from Bank of America, which made the decision quite difficult, but in the end I decided to join EXL. EXL operates in multiple markets and is a leader in the Analytics space, providing IT services and solutions to its vast clientele across the US, EMEA and APAC. My client was a major British bank, and I was hired to help them spearhead their Big Data adoption on the Cloudera platform. Similar to AON, the bank had decided to move to Hadoop to reduce the cost burden of their SAS platform. During my short tenure with EXL I worked on migrating their existing SAS Data Warehouse onto the Cloudera Big Data platform. My responsibilities were primarily designing incremental data load procedures from Teradata and moving historical data from SAS to HDFS. Apart from this, I was actively involved in rewriting multiple SAS programs in Apache Spark using PySpark. After working on Hadoop for about 3 years at AON, this was where I first got introduced to Spark, and believe me, I got intrigued straightaway. By the time I left AON, Apache Spark had started gaining traction, and many companies that hadn’t yet started their Big Data journey chose Spark straightaway because of its in-memory computation and faster processing compared to Hadoop MapReduce. The ETL process I worked on looked something like below:

This project gave me some great insights into Apache Spark, as I worked with PySpark extensively. I also got a chance to work with SAS, Teradata and the existing Hadoop ecosystem of HDFS, Hive, Sqoop, Impala, Beeline, Oozie, HUE, YARN, etc.
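The incremental loads from Teradata mentioned above follow a common pattern: remember how far the last run got and pull only newer rows. The sketch below shows a high-watermark version of that idea in plain Python. It is an illustration under stated assumptions, not the bank’s actual procedure: `sqlite3` stands in for Teradata, and the `transactions` table and its `updated_at` column (ISO-8601 strings, which sort correctly as text) are hypothetical.

```python
import sqlite3


def incremental_extract(source, last_watermark):
    """Return rows changed since last_watermark plus the new watermark.

    The watermark is the largest updated_at seen so far; persisting it
    between runs (e.g. in a control table) lets each run pick up where
    the previous one stopped instead of re-reading the full history.
    """
    rows = source.execute(
        "SELECT id, amount, updated_at FROM transactions "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE transactions (id INTEGER, amount REAL, updated_at TEXT)")
    src.executemany(
        "INSERT INTO transactions VALUES (?, ?, ?)",
        [(1, 10.0, "2016-01-01T09:00:00"), (2, 20.0, "2016-01-02T09:00:00")],
    )
    # First run: nothing loaded yet, so the whole backlog comes through.
    batch, watermark = incremental_extract(src, "1970-01-01T00:00:00")
    print(len(batch), watermark)
    src.execute("INSERT INTO transactions VALUES (3, 5.0, '2016-01-03T09:00:00')")
    # Second run: only the row newer than the stored watermark.
    batch, watermark = incremental_extract(src, watermark)
    print([row[0] for row in batch], watermark)
```

The strict `>` comparison assumes timestamps are unique and rows become visible in timestamp order; a row that shares the watermark’s timestamp or arrives late with an older `updated_at` would be skipped, which is why real pipelines often re-read a small overlap window and de-duplicate.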

RBS

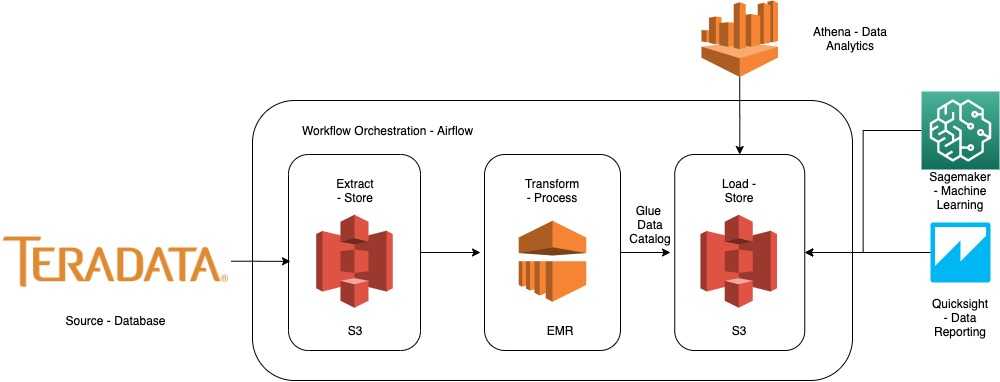

After EXL I joined RBS as a Software Engineer. Joining RBS was a dream come true for me. I still remember that after engineering I couldn’t even apply there because I’m not from an IIT or a Tier 1/Tier 2 college in India, so naturally I was very excited to work here. At RBS my project was similar to the one for the other British bank I worked with at EXL. I was tasked with migrating an existing SAS Data Warehouse onto a Big Data platform; however, instead of Cloudera, we chose the AWS public cloud. RBS was where I got introduced to cloud platforms, and believe me, I’m still hooked. I believe the emergence of cloud providers like AWS, Azure and GCP changed the entire landscape of how organizations manage their data centers, data, infrastructure, platforms and services. I did multiple POCs on AWS services, which paved the path for us to choose the right technology for our use case. The technologies we finally zeroed in on were Amazon EMR for Data Engineering, Amazon Athena for Data Analytics, Amazon SageMaker for Data Science & Machine Learning, Amazon QuickSight for reporting and, lastly, Amazon S3 for data storage. These key analytics services from the AWS suite helped us in our cloud journey. We also selected Apache Airflow as our workflow orchestrator after careful consideration and a POC. Finally, our Data Platform looked something like this:
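On the orchestration side, part of what a tool like Airflow does is resolve task dependencies and release each task once its upstream tasks have finished. The sketch below illustrates only that idea, using Python’s standard-library `graphlib` (Python 3.9+). It does not use Airflow’s API, and the task names loosely mirror the AWS stack above but are hypothetical.

```python
import graphlib

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "ingest_to_s3": set(),
    "emr_transform": {"ingest_to_s3"},
    "athena_tables": {"emr_transform"},
    "sagemaker_features": {"emr_transform"},
    "quicksight_refresh": {"athena_tables"},
}


def run_order(dag):
    """Return one valid sequential order; raises graphlib.CycleError on a cycle."""
    return list(graphlib.TopologicalSorter(dag).static_order())


def run_in_waves(dag):
    """Group tasks into waves whose members have no unmet dependencies.

    Tasks in the same wave could run in parallel, roughly the way an
    orchestrator's scheduler releases tasks as their upstreams succeed.
    """
    sorter = graphlib.TopologicalSorter(dag)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        # Pretend every task in the wave succeeded so downstream tasks unlock.
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(run_in_waves(PIPELINE), start=1):
        print(number, wave)
```

In Airflow itself these dependencies are declared between tasks in a DAG definition, and the scheduler, not a loop like this, decides when each task actually runs, retries it on failure and records its state.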

My work and experience at RBS helped me to grab my next assignment in Singapore, where I started my Data Science journey.

GRAB

Grab is the place that gave me the opportunity to move abroad. I believe that if you possess the right skills, no one can stop you from realizing your dream. I’m still very thankful to my manager, the teammates who interviewed me and our Head of Data Science at Grab, who saw some value in me, offered me the role and then waited 4 months (1 month for the work visa process + 3 months’ notice period) for me to join. After moving to Singapore, my life changed completely. Singapore is like a dream; everything here is so perfect that it sometimes feels unreal. I joined Grab as a Data Scientist - Architecture.

Now you must be wondering what exactly Architecture in my title means. It’s actually the team I joined within the Data Science org. The next question on your mind is probably: what do I do in this team? Well, my team manages the Machine Learning Platform for Data Science on AWS. I support our Spark compute platform on Kubernetes, the Jupyter platform our Data Scientists use to build their notebooks, and the Airflow and Kubeflow instances that schedule our data and model pipelines. My prior experience in Data Engineering and AWS helped me a lot in getting this role. On a daily basis I work with Kubernetes, Spark, Terraform, DevOps tooling and the cloud to support our platform. Working at Grab has been a roller coaster ride for me. I was introduced to so many technologies at such a rapid pace that it took me months to understand them all. Here I have worked on Mesos, Kubernetes, Docker, Kubeflow, MLflow, Spark, Airflow, Datadog, ELK, Jupyter, AWS, Azure, Databricks, Terraform, etc. Many of these were technologies I had never worked with before, and I got the chance to support them here. I would say Grab took me out of my comfort zone and bombarded me with knowledge that was sometimes too overwhelming to grasp at once. There are still some technologies I haven’t had a chance to work with or learn, primarily Kafka and Flink.

Currently I work as a Data Science Lead and manage our Azure ML Platform. Yes, you read that right: we use both AWS and Azure, and I have had the chance to support both in production. The scale at which we operate is immense. It’s the biggest infrastructure and data lake I have ever worked with, which makes the role more challenging and, in the end, more rewarding. Using these platforms, our Data Scientists and Analysts solve complex business problems, which in turn helps drive us forward and increase customer adoption.

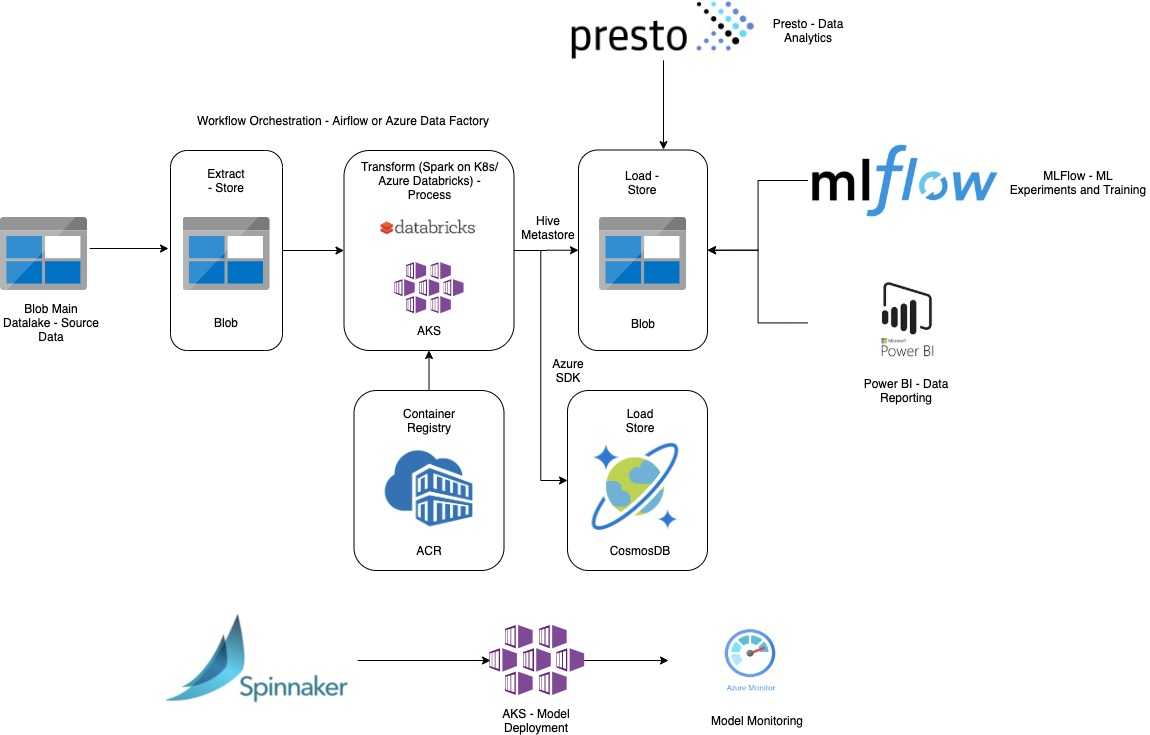

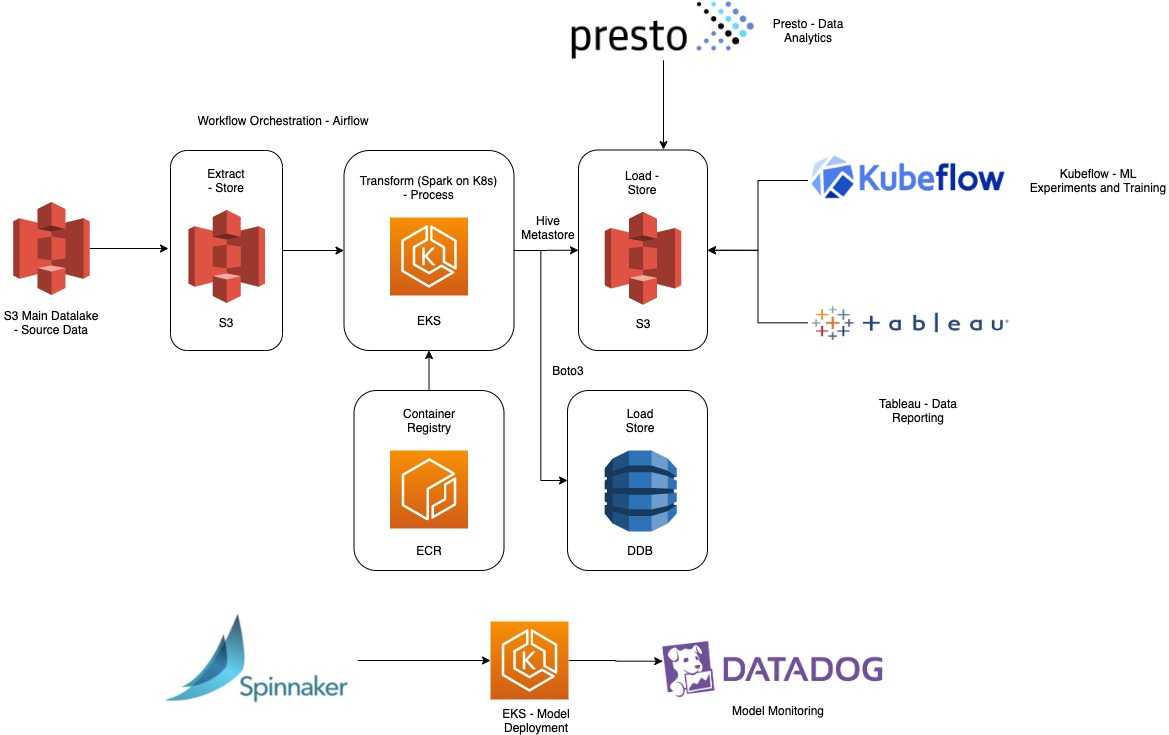

Grab has given me the chance to enter the realm of Data Science and help fellow Grabbers make a difference in the lives of our customers (passengers, drivers and merchants). The ML platforms I helped design look something like the ones below:

Azure ML Platform

AWS ML Platform

To conclude, I thoroughly enjoyed my last 2 years at Grab, and soon I’ll be moving on to my next assignment. Until then, stay strong and stay safe. Thanks for taking the time to read this long article. I hope I’ve been able to share something useful that gives you an insight into how all these technologies fit together.